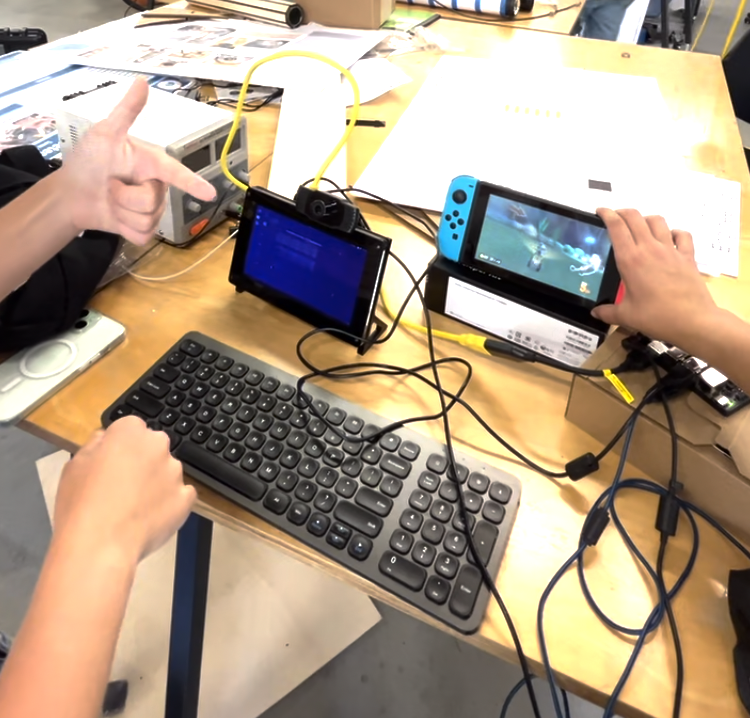

In this project, I hacked the Nintendo Switch to enable gesture-controlled gameplay using computer vision. A custom-trained YOLO model running on an Nvidia Jetson Orin recognizes gestures, and the Jetson emulates the Nintendo Switch Bluetooth protocol to spoof a controller, so gestures arrive at the console as ordinary controller input.

Project Overview

The goal of this project was to build a vision-based control interface for the Nintendo Switch. The system interprets hand and body gestures in real time and translates them into gameplay commands, which opens up more accessible and immersive ways to play.

Key Features

- Advanced Gesture Recognition: Custom-trained YOLO model for accurate and responsive gesture detection.

- Real-time Processing: Optimized computer vision pipeline running on Nvidia Jetson Orin for minimal latency.

- Bluetooth Protocol Emulation: Custom implementation to spoof Nintendo Switch controllers.

- Accessible Gaming: Motion-based controls that offer an alternative to traditional input methods and make play more immersive.
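
The latency goal in the list above is easier to reason about when each pipeline stage is timed separately. The snippet below is a minimal sketch of one way to do that, not the project's actual instrumentation: the function name `time_stages` and the stage names `detect` and `send` are hypothetical, and the stages here are stand-ins for the real inference and Bluetooth code.

```python
import time
from typing import Callable, Dict


def time_stages(
    stages: Dict[str, Callable[[], None]],
    clock: Callable[[], float] = time.perf_counter,
) -> Dict[str, float]:
    """Run each stage once, in order, and return its duration in milliseconds."""
    timings: Dict[str, float] = {}
    for name, stage in stages.items():
        start = clock()
        stage()
        timings[name] = (clock() - start) * 1000.0
    return timings


if __name__ == "__main__":
    # Placeholder stages (assumptions): sleep stands in for real work.
    report = time_stages({
        "detect": lambda: time.sleep(0.01),
        "send": lambda: time.sleep(0.002),
    })
    for name, ms in report.items():
        print(f"{name}: {ms:.1f} ms")
```

Passing the clock in as a parameter keeps the helper easy to test with a fake clock, and summing the per-stage values gives a rough end-to-end figure for one frame.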

Technical Details

The core of the project is a custom computer vision pipeline that detects specific gestures and maps them to game inputs. The pipeline runs in real time on an Nvidia Jetson Orin to keep latency low. The system then emulates the Nintendo Switch's Bluetooth protocol, effectively acting as a virtual controller. The result is gameplay driven by gestures rather than traditional input methods.
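
The step from detected gestures to game inputs can be sketched as a small mapping layer. This is an illustrative sketch under stated assumptions, not the project's code: the gesture labels, the button names, the confidence threshold, and the `GestureMapper` class are all hypothetical, and the detections are assumed to arrive as (label, confidence) pairs from the YOLO model. A button is reported as pressed only after its gesture has been seen for several consecutive frames, which is one common way to suppress flicker from single-frame misdetections.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

# Hypothetical gesture labels and button names; the project's real classes
# and mapping are not given in the text.
GESTURE_TO_BUTTON: Dict[str, str] = {
    "fist": "A",
    "open_palm": "B",
    "point_left": "DPAD_LEFT",
    "point_right": "DPAD_RIGHT",
}


@dataclass
class Detection:
    label: str         # class name predicted by the detector
    confidence: float  # detector score in [0, 1]


class GestureMapper:
    """Turns per-frame detections into a stable set of pressed buttons."""

    def __init__(self, min_confidence: float = 0.6, hold_frames: int = 3):
        self.min_confidence = min_confidence
        self.hold_frames = hold_frames
        self._streak: Dict[str, int] = {}

    def update(self, detections: List[Detection]) -> Set[str]:
        # Keep only confident detections that map to a known button.
        seen = {
            GESTURE_TO_BUTTON[d.label]
            for d in detections
            if d.confidence >= self.min_confidence and d.label in GESTURE_TO_BUTTON
        }
        # Count consecutive frames per button; buttons not seen this frame reset.
        self._streak = {button: self._streak.get(button, 0) + 1 for button in seen}
        return {b for b, n in self._streak.items() if n >= self.hold_frames}


if __name__ == "__main__":
    mapper = GestureMapper(min_confidence=0.5, hold_frames=2)
    for frame in ([Detection("fist", 0.9)], [Detection("fist", 0.8)], []):
        print(sorted(mapper.update(frame)))
```

The returned set of button names would then be handed to whatever layer builds the emulated controller's input reports; that layer is outside the scope of this sketch.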